Mobile AI Services & OnDevice Consulting

Expert OnDevice AI services and consulting for mobile applications. We help you integrate Visual Language Models and other AI capabilities directly into your iOS and Android apps.

Expert OnDevice AI implementation services

Our Services

OnDevice AI Implementation

We specialize in integrating Visual Language Models and other AI capabilities directly into mobile applications with complete privacy and offline functionality.

Mobile Implementation

Custom AI integration for iOS and Android apps using Core ML, TensorFlow Lite, and ONNX Runtime.

Model Optimization

Optimize your models for mobile performance with quantization, pruning, and platform-specific optimizations.

Custom Solutions

Tailored AI solutions for computer vision, NLP, and multimodal applications on mobile devices.

Our Work

Products

Innovative multimodal AI applications showcasing our expertise in OnDevice inference and developer tools.

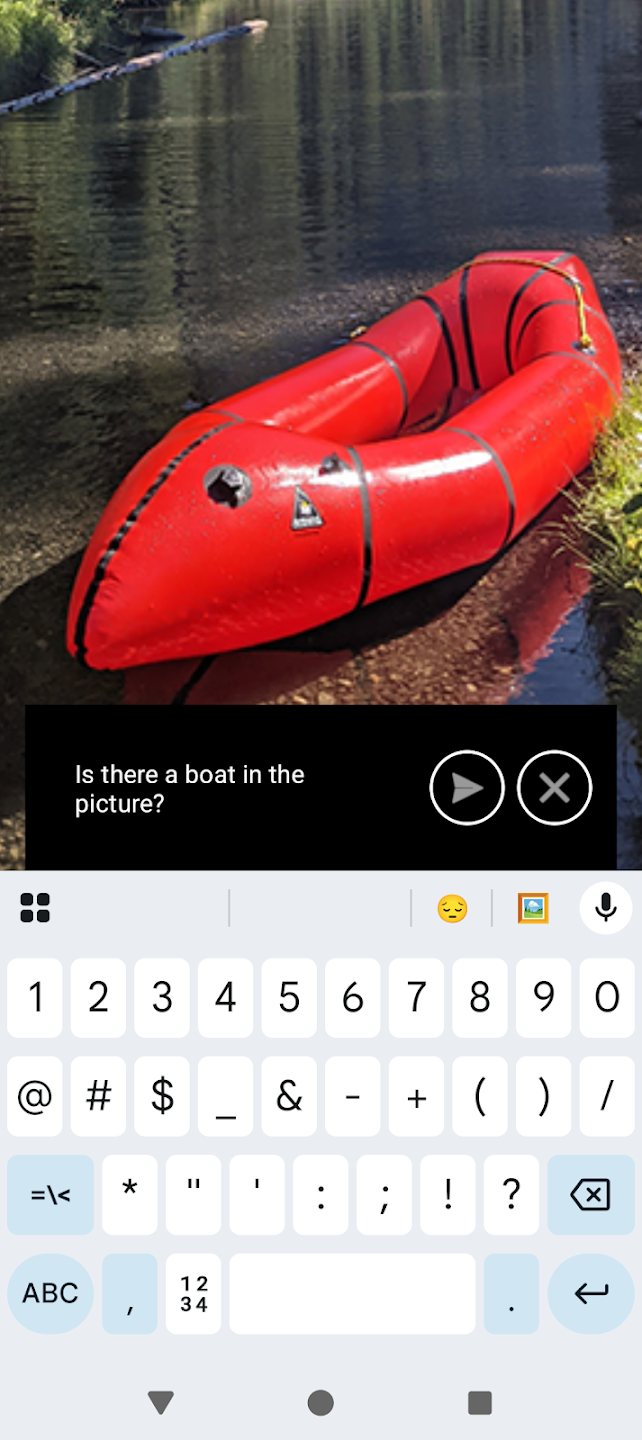

Baseweight Snap

The first app on the Google Play Store to run Visual Language Models OnDevice. Baseweight Snap showcases advanced multimodal AI with complete privacy and offline capability.

Visual Language Model

Advanced AI that understands images and text

Complete Privacy

All processing happens OnDevice

Offline Capable

Works without an internet connection

* Requires a flagship Android device for optimal performance

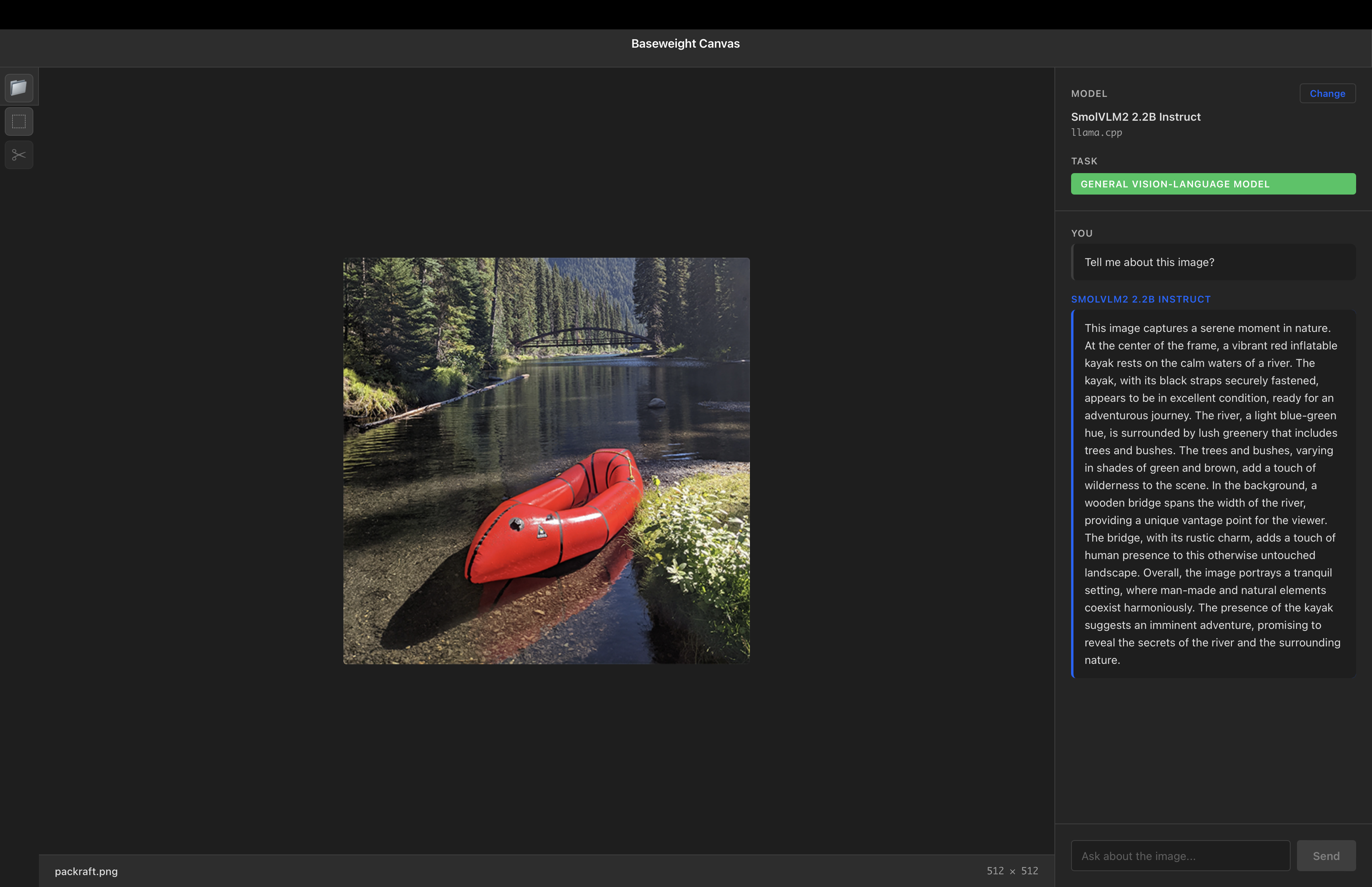

Baseweight Canvas

A desktop multimodal application designed for developers to interact with images and audio using Visual Language Models. Vision and audio first, unlike traditional LLM runners.

Multimodal AI

Image and audio processing capabilities

Local Processing

Run models on your machine

Cross-Platform

Available for Windows, Mac, and Linux

* Pre-release version available now

Our Expertise

Deep experience in mobile AI technologies and frameworks

AI Frameworks

Core ML, TensorFlow Lite, ONNX Runtime, ExecuTorch, and custom C++ implementations.

Privacy & Security

OnDevice processing ensures user privacy while maintaining model security and IP protection.

Performance

Optimized inference pipelines for real-time performance within the constraints of mobile hardware.